Automated QA Testing in Claude Code

AI coding assistants are no longer just for brainstorming or writing boilerplate code. They’re becoming powerful tools in test automation. In this piece we’ll walk through a real QA workflow using Claude to speed up repetitive test creation, highlight the human/AI handoff points, and share lessons learned along the way.

Approaching AI Tools in QA

After some initial, healthy skepticism, I’m now a firm believer that AI tools can dramatically improve QA workflows. Automated testing powered by tools like Claude can cut hours of manual setup down to minutes. But it’s not “end-to-end automation.” Human expertise is still critical in order to:

- Architect processes before they can be automated.

- Identify the right balance between reusability and project-specific rules.

- Review and refine AI-generated code for quality, security, and maintainability.

Using tools like Claude Code, the QA engineer’s role shifts from operating a repetitive system to designing a new one entirely. The payoff is dramatic — a process that once took 1–2 hours manually can now be completed in 20–30 minutes with the right approach.

A Sample Workflow: Photo Upload Test

Let’s walk through one test case for a telehealth application with an AI symptom checker. To make sure patients can get the answers they need, we need to confirm that they can upload a photo during the appointment setup flow.

Manually, the process would usually look something like:

- Enter user credentials and log in.

- Navigate to the dashboard.

- Select symptoms (e.g., conjunctivitis).

- Book an appointment with a provider.

- Answer sequential medical questions.

- Upload a photo from the device library.

- Validate the app doesn’t crash and the upload succeeds.

Running this flow manually is repetitive and time-consuming.

Where Claude Fits In

Instead of hand-coding every selector and step, you can let Claude act as your automation assistant. The key is when you bring it in: not too early in the process, and not tacked on at the end. The craft now lies in finding that balance. Here’s how I approached it:

1. Record the Flow

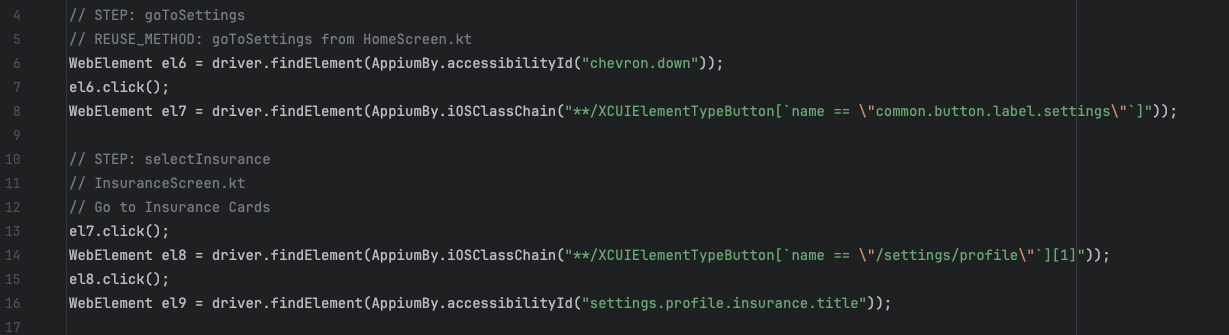

Using a recorder tool, the QA engineer clicks through the scenario. Each action (tap, input, upload) is captured in a Java file.

2. Annotate Steps with Comments

Comments mark each step (e.g., // Login, // Select symptom, // Upload photo). Claude uses these annotations to understand context.
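How Claude uses the comments internally isn't something this workflow exposes, so the sketch below only illustrates the information the annotations add: which recorded lines belong to which step. The `StepAnnotations` helper, the driver calls, and the element IDs in the sample recording are all invented for illustration and are not output from the actual recorder.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: groups recorded lines under the step comment that precedes them. */
class StepAnnotations {

    /** Returns step name -> recorded lines, in the order the steps appear. */
    static Map<String, List<String>> groupBySteps(String recording) {
        Map<String, List<String>> steps = new LinkedHashMap<>();
        String current = null;
        for (String raw : recording.split("\n")) {
            String line = raw.trim();
            if (line.isEmpty()) {
                continue;
            }
            if (line.startsWith("//")) {
                // A comment line opens a new step, e.g. "// Login".
                current = line.substring(2).trim();
                steps.putIfAbsent(current, new ArrayList<>());
            } else if (current != null) {
                steps.get(current).add(line);
            }
            // Lines before the first comment belong to no step and are skipped.
        }
        return steps;
    }

    public static void main(String[] args) {
        // Illustrative recording; the driver calls and element IDs are invented.
        String recording = String.join("\n",
            "// Login",
            "driver.findElement(By.id(\"email\")).sendKeys(email);",
            "driver.findElement(By.id(\"login\")).click();",
            "// Select symptom",
            "driver.findElement(By.id(\"symptom_conjunctivitis\")).click();",
            "// Upload photo",
            "driver.findElement(By.id(\"upload_photo\")).click();");

        groupBySteps(recording).forEach((step, lines) ->
            System.out.println(step + ": " + lines.size() + " action(s)"));
    }
}
```

Without the comments, the recording is one flat list of taps and inputs. With them, each group of lines has a name that can be mapped to a step method later.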

3. Apply Project Rules (CLAUDE.md file)

The CLAUDE.md rules file defines:

- Naming conventions and file structures (e.g., PascalCase).

- When to reuse existing methods (e.g., login flows).

- How to map steps to test files.

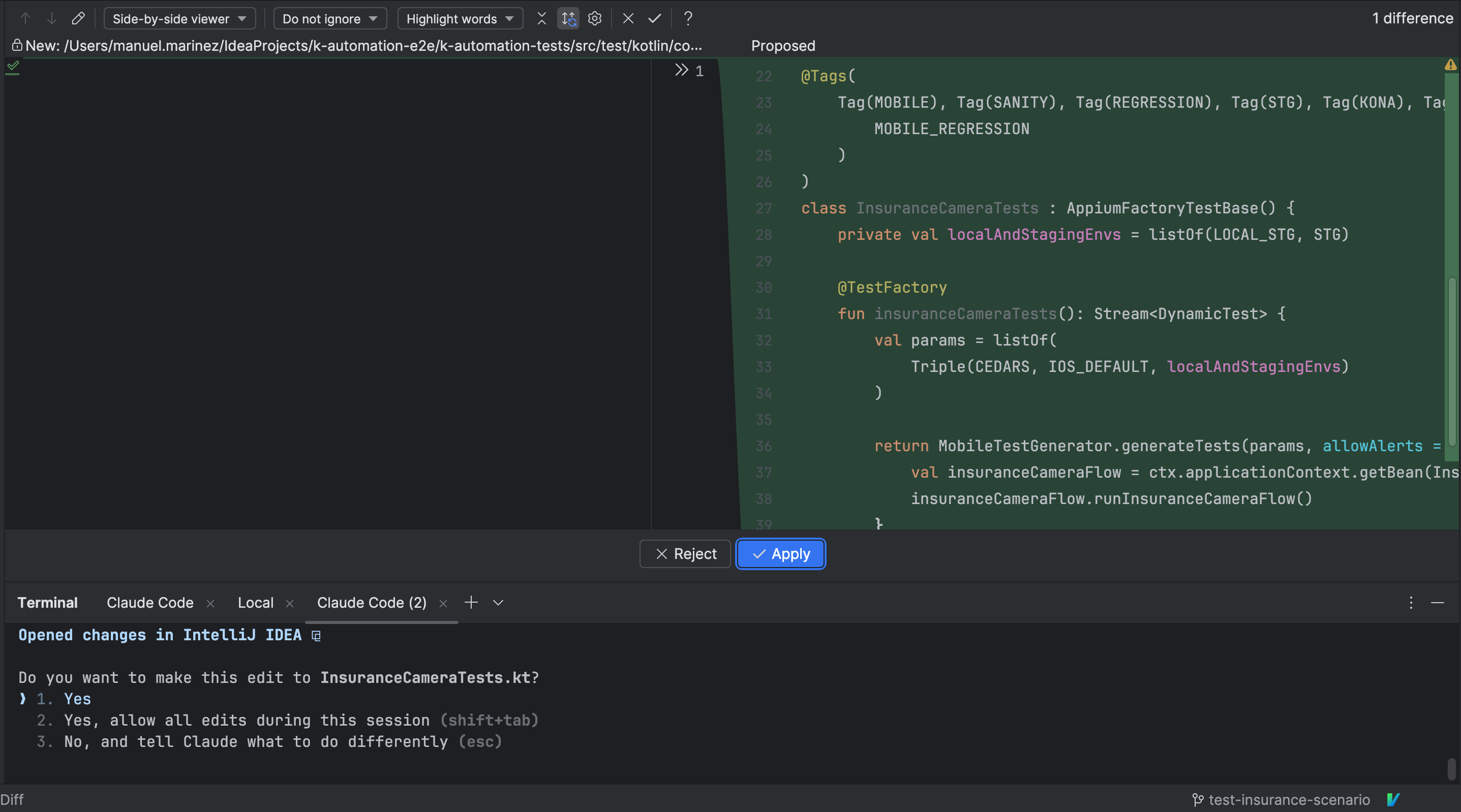

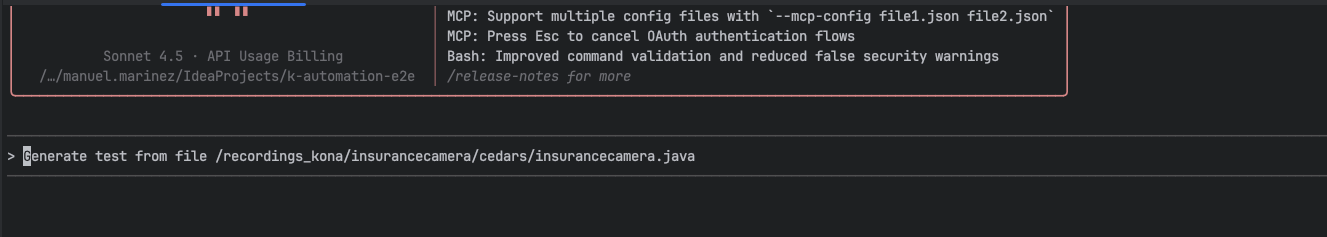

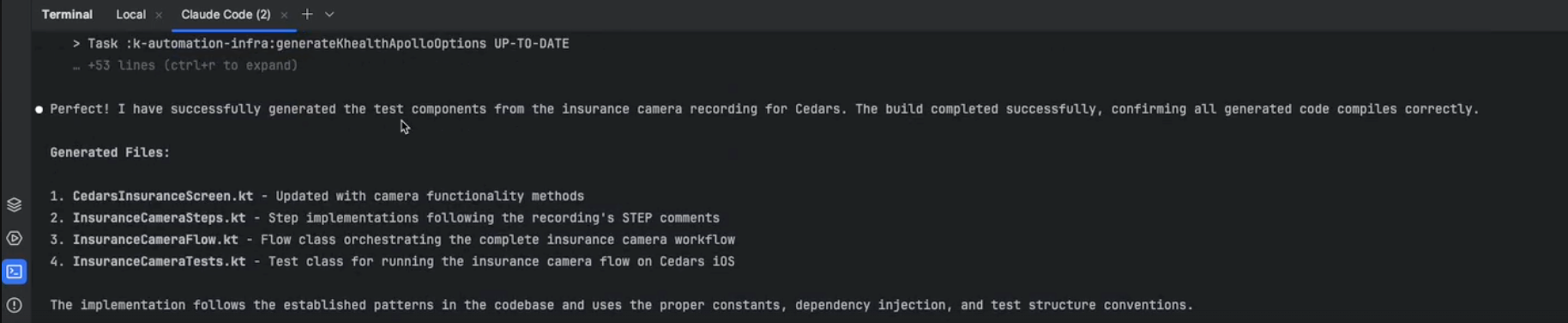

4. Let Claude Generate the Code

Claude ingests the recordings and rules, then generates three kinds of test files:

- Flow classes (for scenario order).

- Step classes (for individual actions).

- Page object files (for selectors and UI elements).
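To make the three layers concrete, here is a minimal sketch of how flow, step, and page object classes might fit together. It is not the project's generated code: every class name, selector value, and the `UiDriver` interface are assumptions. `UiDriver` stands in for a real driver so the example runs without a device.

```java
import java.util.ArrayList;
import java.util.List;

/** Entry point: runs the flow against a fake driver and prints the recorded actions. */
class PhotoUploadFlowDemo {
    public static void main(String[] args) {
        RecordingDriver driver = new RecordingDriver();
        // Placeholder values only; real credentials should never live in source.
        new PhotoUploadFlow(driver).run("patient@example.com", "placeholder");
        driver.actions.forEach(System.out::println);
    }
}

/** Stand-in for a real UI driver; hypothetical, so the sketch runs without a device. */
interface UiDriver {
    void tap(String selector);
    void type(String selector, String text);
}

/** Page objects: selectors only. Every selector value here is invented. */
final class LoginPage {
    static final String EMAIL_FIELD = "id=email";
    static final String PASSWORD_FIELD = "id=password";
    static final String LOGIN_BUTTON = "id=login";
}

final class PhotoUploadPage {
    static final String UPLOAD_BUTTON = "id=upload_photo";
    static final String FIRST_LIBRARY_PHOTO = "id=library_photo_0";
}

/** Step classes: one method per user action, built on the page objects. */
final class LoginSteps {
    private final UiDriver driver;
    LoginSteps(UiDriver driver) { this.driver = driver; }

    void logIn(String email, String password) {
        driver.type(LoginPage.EMAIL_FIELD, email);
        driver.type(LoginPage.PASSWORD_FIELD, password);
        driver.tap(LoginPage.LOGIN_BUTTON);
    }
}

final class PhotoUploadSteps {
    private final UiDriver driver;
    PhotoUploadSteps(UiDriver driver) { this.driver = driver; }

    void uploadFromLibrary() {
        driver.tap(PhotoUploadPage.UPLOAD_BUTTON);
        driver.tap(PhotoUploadPage.FIRST_LIBRARY_PHOTO);
    }
}

/** Flow class: fixes the order of the scenario and reuses existing steps. */
final class PhotoUploadFlow {
    private final LoginSteps login;
    private final PhotoUploadSteps upload;

    PhotoUploadFlow(UiDriver driver) {
        this.login = new LoginSteps(driver);
        this.upload = new PhotoUploadSteps(driver);
    }

    void run(String email, String password) {
        login.logIn(email, password);
        // Symptom selection, booking, and the medical questions would sit here.
        upload.uploadFromLibrary();
    }
}

/** Fake driver that records each action instead of touching a real app. */
final class RecordingDriver implements UiDriver {
    final List<String> actions = new ArrayList<>();
    @Override public void tap(String selector) { actions.add("tap " + selector); }
    @Override public void type(String selector, String text) { actions.add("type " + selector); }
}
```

Keeping selectors in page objects means a changed element ID is a one-line fix, and keeping login in its own step class is what lets a rule like "reuse existing login flows" actually be followed.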

5. Review & Debug

The engineer reviews the generated files, validates selectors, and fixes any missteps. Common pitfalls:

- Misclassified selectors (e.g., ID vs XPath).

- Fragile selectors that change with app updates.

- Security risks (Claude sometimes proposes hard-coded values).

With experience, teams learn to anticipate these errors and adjust rules accordingly.
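Part of the selector review can be turned into a quick check. The sketch below is a hypothetical review aid, not part of the workflow described here. It assumes locators are plain strings, treats anything starting with `/` or `(` as XPath, and flags absolute paths and positional indexes as fragile. These are heuristics and won't catch every brittle locator.

```java
import java.util.regex.Pattern;

/** Hypothetical review aid for locator strings in generated page objects. */
class SelectorCheck {

    enum Kind { ID, XPATH }

    // Positional steps such as "/div[3]" tend to break when the layout changes.
    private static final Pattern POSITIONAL_INDEX = Pattern.compile("\\[\\d+\\]");

    /** Treats anything starting with "/" or "(" as XPath; everything else as an ID. */
    static Kind classify(String locator) {
        String trimmed = locator.trim();
        return (trimmed.startsWith("/") || trimmed.startsWith("(")) ? Kind.XPATH : Kind.ID;
    }

    /** Flags XPaths that are absolute (single leading slash) or rely on positional indexes. */
    static boolean isFragile(String locator) {
        String trimmed = locator.trim();
        if (classify(trimmed) != Kind.XPATH) {
            return false;
        }
        boolean absolute = trimmed.startsWith("/") && !trimmed.startsWith("//");
        return absolute || POSITIONAL_INDEX.matcher(trimmed).find();
    }

    public static void main(String[] args) {
        // Sample locators are invented for illustration.
        String[] locators = {
            "upload_photo",
            "//button[@id='upload_photo']",
            "/hierarchy/android.widget.FrameLayout/android.widget.Button[2]"
        };
        for (String locator : locators) {
            System.out.println(classify(locator) + " fragile=" + isFragile(locator) + " " + locator);
        }
    }
}
```

A check like this doesn't replace the human review. It just surfaces the locators most worth a second look, and what it finds can be fed back into the rules file.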

6. Run the Test

Finding Balance

On this particular project, the process has reduced setup time by ~70%, improved reusability, and helped us deliver a better product in less time. Still, success requires careful upfront architecture and thorough human oversight, since selectors often trip up the AI and rules must be rebuilt for each new project. Claude moves at a speed no human could match, but it also makes mistakes no human would.

Understanding how to navigate both realities is what will redefine the “Quality” in QA.

Practical Advice for QA Teams

Start small: Begin with simple, repetitive flows like logins, file uploads, or form submissions—these are perfect for automation because they’re high-frequency and low-risk. Starting here helps you validate the process, refine your rules, and build confidence in Claude before tackling more complex scenarios.

Document rules clearly: Your CLAUDE.md (or equivalent rules file) acts as the brain of the system, guiding Claude on naming conventions, file structures, and method reuse. The clearer and more prescriptive this file is, the less cleanup you’ll face later, making it one of the highest-leverage investments in your QA automation setup.

Expect mistakes: Claude moves fast, but it’s not flawless—misclassified selectors, fragile locators, or incorrect assumptions will creep in. By budgeting time for debugging, you turn these missteps into learning moments that strengthen both your test suite and your rules over time.

Always review: Never merge AI-generated code blindly; human validation is critical. Security is a particular concern—watch closely for leaked credentials, hard-coded values, or unsafe shortcuts that might slip through without careful review.
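One common fix for hard-coded credentials is to load them from the environment instead of the test source. The sketch below assumes two environment variables, `QA_USER_EMAIL` and `QA_USER_PASSWORD`. Both names and the `TestCredentials` class are hypothetical, and a real project may use a secret manager instead.

```java
import java.util.Map;

/** Hypothetical holder for test credentials, loaded from the environment rather than source. */
final class TestCredentials {
    final String email;
    final String password;

    private TestCredentials(String email, String password) {
        this.email = email;
        this.password = password;
    }

    /** Reads the (assumed) QA_USER_EMAIL and QA_USER_PASSWORD variables from the given map. */
    static TestCredentials from(Map<String, String> env) {
        return new TestCredentials(require(env, "QA_USER_EMAIL"), require(env, "QA_USER_PASSWORD"));
    }

    private static String require(Map<String, String> env, String name) {
        String value = env.get(name);
        if (value == null || value.isEmpty()) {
            // Fail fast so a missing secret is never papered over with a literal.
            throw new IllegalStateException("Missing environment variable: " + name);
        }
        return value;
    }

    public static void main(String[] args) {
        try {
            TestCredentials credentials = TestCredentials.from(System.getenv());
            // Only the email is printed; the password never reaches the logs.
            System.out.println("Loaded credentials for " + credentials.email);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```

Passing the environment in as a map keeps the loader easy to unit test, and a rule such as "never inline credentials; use the credentials loader" is easy to state in the rules file.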

Iterate rules: As you run more scenarios, your rule set will grow into a library of reusable steps, making Claude more accurate and reducing duplication. Over time, this library becomes a strategic asset—accelerating test creation and improving consistency across your QA practice.